Introduction

Software development has been around since the 1940s.

We started with punch cards, then machine language, followed by assembly, high-level programming languages, low code, no code, and now AI-assisted coding.

Along the way, several tools have been developed to make programmers' jobs easier, from card sorters and verifiers to debuggers and IDEs.

Now, with the advent of AI, we have large language models (LLMs) writing code for us, but I don't think it's quite there yet.

In this article we'll see how AI assists developers, what it can do for us today, its limitations, and where it's headed.

The concept of AI began in the 1950s when researchers tried to imbue machines with the magic to think. Early systems followed set rules, but as computers improved and data became more available, smarter methods emerged, such as machine learning, natural language processing, and neural networks.

Large Language Models (LLMs) grew from these advances, using huge amounts of data and computing power to understand and create language. This marked a shift from fixed rules to models that learn on their own.

By 2025, AI has taken root in most fields, even in places we might not have expected.

For example, robotic bees, tiny drones designed to mimic bee behavior, are now being used to assist with pollination in areas where natural bee populations are struggling. These drones combine machine learning and computer vision for navigation, flight control, pollination strategies, and swarm intelligence.

Usage

Copilot is integrated with both Visual Studio Code and Visual Studio, and it comes with a few LLMs built in by default.

Currently, these include Claude Sonnet 3.5, GPT-4o, o3-mini, and Gemini Flash 2.0.

If you want to add more models, you'll need a subscription for Copilot Pro.

We can use Copilot Chat to prompt these models directly in the sidebar chat, whether to generate a specific piece of functionality or to create an entirely new file.

Here, I asked it to create a simple sales order.

Notably, it kept the key details — Customer, Item, and Quantity — as parameters without requiring any input.

From here, we can click a button to apply the changes to the open file.

At the bottom, we can see which file Copilot is currently using as a reference.

If we want to stop Copilot from referencing that file, we can click the eye button.

We can also ask it to make changes to the generated code.

Now, I noticed that while it has parameterized the "Customer No." for the sales order, it hasn't actually used it anywhere in the code.

If I point this out to Copilot...

Instead of using Copilot Chat, we can also get recommendations directly within the file.

Here, I'm trying to write a function to delete a sales order based on the given SO No.

I can just tab my way into writing the method.

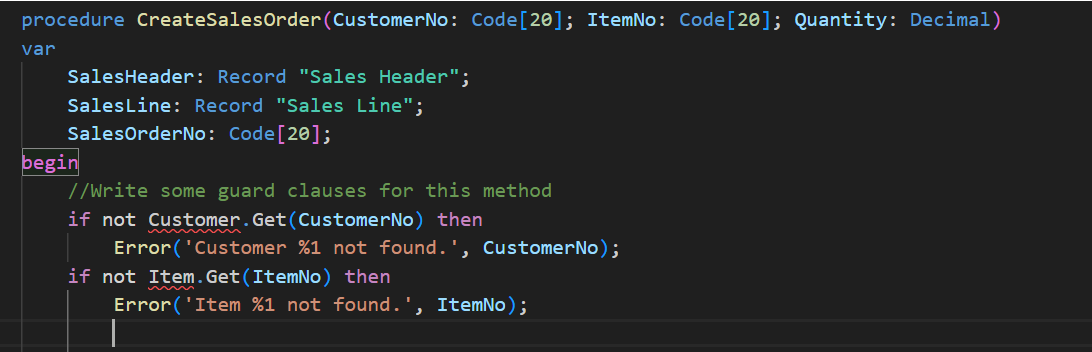
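For reference, a hand-written version of such a delete function might look like the sketch below. The codeunit number, codeunit name, and procedure name are assumptions made for illustration; this is not the code Copilot produced in the screenshot.

```al
// Hypothetical object ID and name, chosen only for this sketch.
codeunit 50100 "Sales Order Delete Sketch"
{
    procedure DeleteSalesOrder(SalesOrderNo: Code[20])
    var
        SalesHeader: Record "Sales Header";
    begin
        // "Sales Header" is keyed by Document Type and No.
        if not SalesHeader.Get(SalesHeader."Document Type"::Order, SalesOrderNo) then
            Error('Sales order %1 does not exist.', SalesOrderNo);

        // Delete(true) runs the table's OnDelete trigger instead of bypassing it.
        SalesHeader.Delete(true);
    end;
}
```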

One common way I've used Copilot is to add guard clauses to methods that I've written.

For instance -

Here, it is referring to Customer and Item record variables, which don't exist yet.

But if I move to the variables section, it recognizes what I'm trying to do and suggests the matching declarations.
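As a minimal sketch of what guard clauses can look like in AL, assuming a procedure that takes the same three parameters as the earlier sales order example: the codeunit, the procedure name, and the error texts are all hypothetical. Note that the Customer and Item record variables have to be declared in the var section before the guards can use them.

```al
// Hypothetical object ID and name, chosen only for this sketch.
codeunit 50101 "Guard Clause Sketch"
{
    procedure CreateSalesOrder(CustomerNo: Code[20]; ItemNo: Code[20]; Quantity: Decimal)
    var
        Customer: Record Customer;
        Item: Record Item;
    begin
        // Guard clauses: fail fast before any sales document is touched.
        if CustomerNo = '' then
            Error('Customer No. must not be empty.');
        if not Customer.Get(CustomerNo) then
            Error('Customer %1 does not exist.', CustomerNo);
        if not Item.Get(ItemNo) then
            Error('Item %1 does not exist.', ItemNo);
        if Quantity <= 0 then
            Error('Quantity must be greater than zero.');

        // The actual order creation would follow here.
    end;
}
```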

Now, if I were to make it handle something complex, that's when the cracks start to show.

For example, pulling data from an API and creating customers would require several steps — authenticating with the API, fetching the data, parsing it, handling errors or logging, and finally creating the customers.

We get the following as an output -

Here, we can see that while it has a surface-level understanding of the code structure and the steps needed to achieve the goal, it struggles with the details.

This could be because, unlike widely used languages such as Java, Python, or C++, there isn't as much publicly available source code for AL.

I believe Microsoft Documentation would have helped to some degree, but instead, it tends to guess what the correct methods or fields should be.

To its credit, the generated code isn't far off from being functional, especially considering the simplicity of the input prompt. The structure it provides is still a solid starting point and much better than writing everything from scratch.
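For comparison, the steps listed earlier (authenticate, fetch, parse, handle errors, create customers) could be written by hand roughly as follows. This is only a sketch under stated assumptions: the endpoint, the bearer-token authentication, and the JSON shape (an array of objects with "no" and "name" keys) are hypothetical, as are the codeunit and procedure names. Real integrations would also need logging and probably retry handling.

```al
// Hypothetical object ID and name, chosen only for this sketch.
codeunit 50102 "Customer Import Sketch"
{
    // Assumed payload: [{"no": "C0001", "name": "Contoso"}, ...]
    procedure ImportCustomers(Url: Text; BearerToken: Text)
    var
        Client: HttpClient;
        Response: HttpResponseMessage;
        Body: Text;
        Customers: JsonArray;
        CustomerToken: JsonToken;
    begin
        // 1. Authenticate (a bearer token is assumed here).
        Client.DefaultRequestHeaders().Add('Authorization', 'Bearer ' + BearerToken);

        // 2. Fetch the data and stop on transport or HTTP errors.
        if not Client.Get(Url, Response) then
            Error('Could not reach %1.', Url);
        if not Response.IsSuccessStatusCode() then
            Error('Request failed with status %1.', Response.HttpStatusCode());

        // 3. Parse the response body as a JSON array.
        Response.Content().ReadAs(Body);
        if not Customers.ReadFrom(Body) then
            Error('The response is not a JSON array.');

        // 4. Create one customer per array element.
        foreach CustomerToken in Customers do
            CreateCustomer(CustomerToken.AsObject());
    end;

    local procedure CreateCustomer(CustomerJson: JsonObject)
    var
        Customer: Record Customer;
        ValueToken: JsonToken;
        CustomerNo: Code[20];
    begin
        // Skip entries without a number and customers that already exist.
        if not CustomerJson.Get('no', ValueToken) then
            exit;
        CustomerNo := CopyStr(ValueToken.AsValue().AsCode(), 1, MaxStrLen(CustomerNo));
        if Customer.Get(CustomerNo) then
            exit;

        Customer.Init();
        Customer."No." := CustomerNo;
        if CustomerJson.Get('name', ValueToken) then
            Customer.Validate(Name, CopyStr(ValueToken.AsValue().AsText(), 1, MaxStrLen(Customer.Name)));
        Customer.Insert(true);
    end;
}
```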

Another example of these "hallucinations" is when it suggests methods that don't actually exist, like this-

However, once you show it what the correct method is, it suggests that -

To go one step further, I asked each of the models to create an entire project based on the prompt below -

Findings:

o3-mini

1. The objects it generated had the fewest errors.

2. It was the simplest and closest to compiling successfully.

3. It returned all the text in a single response, so I had to manually create files from it.

GPT-4o

1. Created a Readme.md with project requirement details.

2. Automatically generated the necessary project files.

3. Farthest from compiling successfully, with most requirements missed.

4. There were plenty of hallucinations, including methods that don't exist in AL at all - like this example below.

Gemini Flash 2.0

1. Created a Readme.md with project requirement details.

2. Automatically generated the necessary project files.

3. Added launch.json, settings.json, and app.json.

4. Didn't meet all requirements but managed to lay some groundwork.

5. Struggled with code structure in several places, though still significantly better than GPT-4o.

6. Had at least a couple of pages with zero errors.

Claude Sonnet 3.5

1. Created a Readme.md with project requirement details.

2. Automatically generated the necessary project files.

3. Added launch.json and app.json.

4. Included a test codeunit, though it had errors.

5. Created a permission set for the objects generated.

6. All files had one or more errors.

In my opinion, Claude and o3-mini are the most useful for coding assistance.

HumanEval is a test developed by OpenAI to assess how well language models can write code.

It includes 164 programming problems where the model must generate accurate and functional Python code.
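HumanEval scores are usually reported as pass@k: the probability that at least one of k generated solutions passes a problem's unit tests. As a sketch of the estimator described in the benchmark's original paper, where n samples are generated per problem and c of them pass:

$$
\text{pass@}k = \mathbb{E}_{\text{problems}}\left[\,1 - \frac{\binom{n-c}{k}}{\binom{n}{k}}\,\right]
$$

For example, with n = 10 samples of which c = 3 pass, pass@1 for that problem is 1 - 7/10 = 0.3. Leaderboards may use different sampling settings, so scores are not always directly comparable.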

The HumanEval leaderboard aligns with my assessment as well.

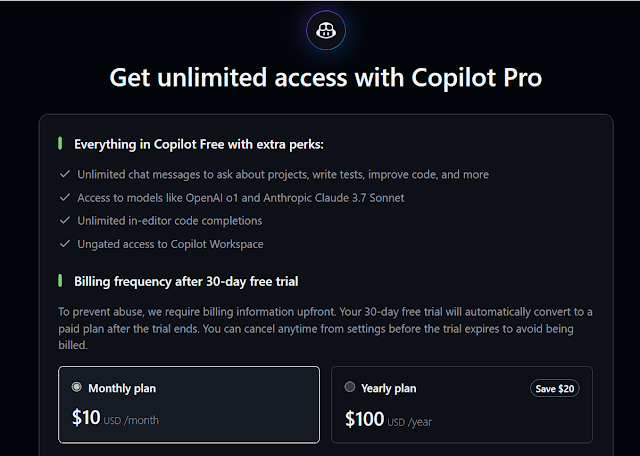

Pricing

While all these models offer a free trial with a limited set of tokens, they can become quite expensive if you don’t monitor your usage.

Below is the pricing chart for the "Pay-as-you-go" plans:

As well as subscription plans:

Claude: Ranges from $0 (free) to $25 (Team) per month.

ChatGPT: Ranges from $0 (free) to $200 (Enterprise) per month.

Gemini Code Assist: Ranges from $0 (free) to $54 (Enterprise) per month.

I couldn't find any reliable sources for the exact limits of free usage. If I do find them, I'll update this article.

Conclusion

AI models today are still limited in their ability to handle complex projects, as they struggle to retain context when the project size grows. However, their performance is set to improve with advancements in both software efficiency (through techniques like knowledge distillation, pruning, and quantization) and hardware speeds (with specialized AI processors, NPUs, and Hardware-Aware Model Optimization).

These improvements will enable models to manage larger contexts and train on even more data. Combined with techniques like iterative prompting and the ability to run code, detect errors, and use error messages as prompts — as seen in tools like Devin AI (by Cognition Labs) and Claude Code — there's clear evidence that AI in software development still has significant room to grow.

The new Claude 3.7 model (in research preview as of March 2025) is already showing promise, reportedly resolving over 70% of the real-world GitHub issues in the SWE-bench Verified benchmark, though it's not yet available in Copilot Chat.

Software development has undeniably reached stage #4 (Intelligent Automation) in the Automation Maturity Model, and we are steadily moving towards stage #5 (Autonomous Operations).

In the end, those who ride the wave of change will leave those fighting the current stranded on the shore.
